GitHub’s command-line tool and its AI-powered Copilot “suggest” feature are a great combination.

I know I can use variables, loops and wget to grab a bunch of URLs that I want to save, but I don’t do this often enough to remember all the syntax nuances.

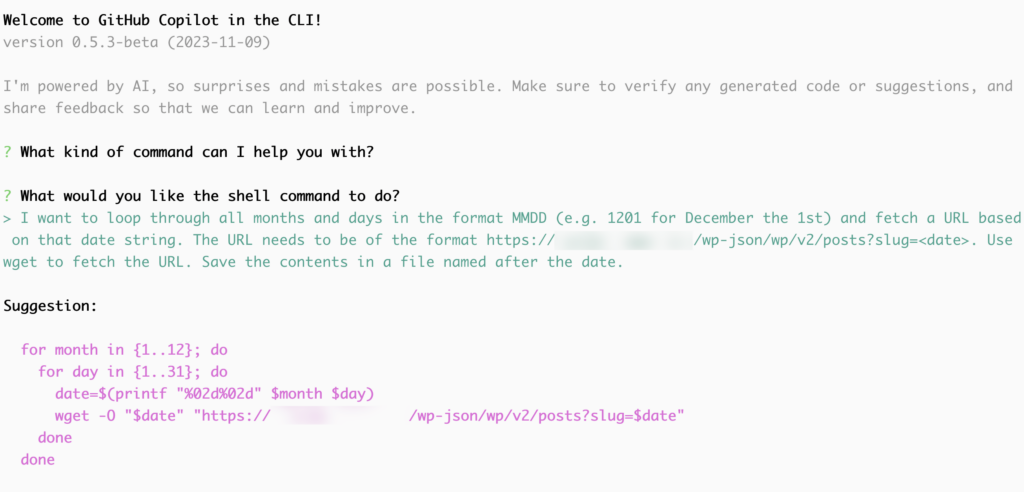

So let’s ask Copilot:

> I want to loop through all months and days in the format MMDD (e.g. 1201 for December the 1st) and fetch a URL based on that date string. The URL needs to be of the format https://somewebsite.com/wp-json/wp/v2/posts?slug=<date>. Use wget to fetch the URL. Save the contents in a file named after the date.
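
For reference, here is roughly what that prompt asks for, sketched in Python rather than as the shell loop Copilot would suggest. This is not Copilot’s output, just a minimal sketch under a few assumptions: it swaps wget for Python’s standard-library `urllib.request.urlretrieve`, keeps the placeholder `somewebsite.com` URL from the prompt, uses a leap year so that 0229 is included, and the `posts` output directory, the `.json` file extension and the function names are all hypothetical choices.

```python
import calendar
import os
import urllib.request

# Placeholder base URL copied from the prompt; swap in the real site.
BASE_URL = "https://somewebsite.com/wp-json/wp/v2/posts?slug="

# Assumption: use a leap year so that February 29th (0229) is included.
LEAP_YEAR = 2024


def date_slugs():
    """Yield every MMDD string from 0101 to 1231, e.g. '1201' for December 1st."""
    for month in range(1, 13):
        # monthrange returns (weekday of the 1st, number of days in the month)
        _, days_in_month = calendar.monthrange(LEAP_YEAR, month)
        for day in range(1, days_in_month + 1):
            yield f"{month:02d}{day:02d}"


def build_url(slug):
    """Build the WordPress REST API URL for one date slug."""
    return BASE_URL + slug


def fetch_all(out_dir="posts"):
    """Fetch each URL and save the response in a file named after the date.

    urlretrieve stands in for wget here; out_dir is a hypothetical name.
    """
    os.makedirs(out_dir, exist_ok=True)
    for slug in date_slugs():
        path = os.path.join(out_dir, f"{slug}.json")
        try:
            urllib.request.urlretrieve(build_url(slug), path)
        except OSError as err:
            # URLError/HTTPError are OSError subclasses: report and keep going.
            print(f"{slug}: {err}")


if __name__ == "__main__":
    fetch_all()
```

Running it as a script fetches all 366 dates one after another into `posts/`; importing it only defines the functions, so the date logic can be checked without touching the network.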

Note: I’m doing this with a free-to-view site that hosts arguably open material and hasn’t been updated in YEARS, to build an interface to it purely for my own use. I consider this fair use of the content. I am NOT republishing copyrighted work.

I really feel like this is where AI works well: as a tool that boosts human productivity, not as a replacement for it.

In this case, removing friction from obtuse command-line stuff.

Next, it will help me write some JavaScript to load this saved data without my needing to look up the docs. It’s great.

Yesterday I tried ChatGPT’s voice chat interface. That was amazing too.

I do feel like a bunch of important and useful websites miss out on hits, kudos and advertising bucks when I find things out this way. We need to sort that out. This tech is amazing, but gosh, I can see how it breaks the web.