Conversations about Code

I’ve had a couple of great conversations lately about coding, languages and teaching people to code. The latter conversation was on the back of CodeAcademy launching an “Hour of Code” app that aims to teach the basics of programming to anyone with a smartphone (iOS only at present, I believe, sorry!).

Now, I actually think CodeAcademy is a great resource with a great mission: making learning to code easy! But, I think CodeAcademy’s approach, and in particular, the focus on JavaScript (which is, in my opinion, a bad language to learn to code in), isn’t great. And the app version has some serious flaws.

(I’m keen to point out at this point that this is opinion, and that I have a particular, very formal background in software engineering on which those opinions are based. Other opinions may also be valid!)

Examples

To demonstrate these flaws, I had a conversation with someone older than me who’s done no coding. I explained, in very basic terms, a particular aspect of why I dislike JavaScript as a language to learn to code. It went something like this.

In coding, there are different types of things. There is the number 2, and there is the text representing the number 2.

The number two is written like this:

2

This is actually a number.

Here is another number:

4

You can do maths with numbers. So you can add numbers together:

2 + 4 = 6

Now. In most programming languages you can also write 2 with quotes around it, like this:

“2”

This is not a number. This is called a “string”. Strings are just text – they can contain any symbols: letters, numbers, dollar signs, etc.

It’s like the old “Ceci n’est pas une pipe” thing. Magritte’s painting of a pipe. It’s not actually a pipe, it’s just a painting of a pipe. This is not the number two, it’s a textual version of the number 2.

We can have a string with a letter in:

“a”

Or we can have a string with several letters and/or numbers:

“abc123”

And, conveniently, you can join strings together. Oddly, in some languages, you use the + sign to do this:

“abc” + “123” = “abc123”

So. Let’s do some “addition” and see what we get.

2 + 4 = 6

but

“2” + “4” = “24”

remember these have quotes around them and so they are strings, not numbers. + joins them together, rather than adding them. So we can also do:

“2” + “a” = “2a”

Hope you’re still with me.

To the conundrum then. What is this?:

2 + “a” = ?

When I put this to said older person who has no coding experience he said “It’s not allowed”.

And, in my opinion, he’s right. This shouldn’t be allowed. It should print an error message and force you to correct it.
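For the coders reading, here is a rough sketch of what “not allowed” could look like in practice. The `strictAdd` function is something I’ve made up purely for illustration; it is not part of JavaScript or any library. It simply refuses to combine a number with a string instead of guessing what you meant.

```javascript
// Hypothetical helper, made up for this post: it is not a built-in.
// It adds two numbers or joins two strings, and refuses anything else.
function strictAdd(left, right) {
  if (typeof left !== typeof right) {
    throw new TypeError(`cannot add a ${typeof left} to a ${typeof right}`);
  }
  if (typeof left !== "number" && typeof left !== "string") {
    throw new TypeError("strictAdd only accepts numbers or strings");
  }
  return left + right;
}

const sum = strictAdd(2, 4);        // the number 6
const joined = strictAdd("2", "4"); // the string "24"

let message;
try {
  strictAdd(2, "a"); // mixing a number and a string is rejected
} catch (error) {
  message = error.message;
}
console.log(message); // cannot add a number to a string
```

The point isn’t this particular function. It’s that the mistake gets reported at the moment you make it, rather than being quietly turned into something else.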

There are other opinions. Some would say that, clearly, this should be:

2 + “a” = “2a”

In this case it’s assumed that the language will convert the number 2 from a number into a string and then do the joining.

But assumptions are bad. If you assume that then do you assume the same thing happens with two numbers?

2 + 2 = “22” ?

Clearly that’s an exaggeration, because it’s so simple. The intention there is clearly to do addition.

But what about something even ever-so-slightly more complex like:

2 + 2 + “a” =?

Is this:

2 + 2 + “a” = 4 + “a” = “4a”

or

2 + 2 + “a” = 2 + “2a” = “22a”

Note to coders: yes, we can learn something from trying this. We can learn type casting/coercion, and we can learn operator precedence. My point is that we shouldn’t have to. Code should be clear and meaningful, not ambiguous. We should teach people to understand the code that they write, and it’s much easier to teach that if the code that they write is correct and meaningful.
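For anyone who wants to check, this is what JavaScript itself does with these examples. It’s a small sketch you can paste into the browser console; the variable names are mine.

```javascript
// JavaScript converts the number to a string and joins the two.
const mixed = 2 + "a";

// The + signs are applied left to right, so this is (2 + 2) + "a".
const numbersFirst = 2 + 2 + "a";

// Same rule, different order: this is ("a" + 2) + 2.
const stringFirst = "a" + 2 + 2;

console.log(mixed);        // 2a
console.log(numbersFirst); // 4a
console.log(stringFirst);  // a22
```

So the same three values give a different kind of answer depending only on the order you write them in, and JavaScript never complains about any of it.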

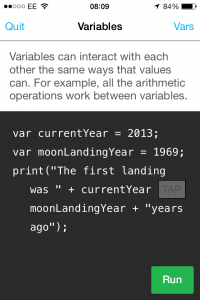

So it kind of pained me to see an ambiguous example of hugely ambiguous code in CodeAcademy’s app. The image below is a screen shot:

Here’s the code (I’ve filled in the minus symbol that is the correct answer to the question):

var currentYear = 2013;

var moonLandingYear = 1969;

print("The first landing was " + currentYear - moonLandingYear + " years ago");

This has introduced an extra concept: the = sign is used to save a value. So when you see “var currentYear = 2013” that means “store the value 2013 using the name currentYear”. The code can then get the value 2013 back by asking for currentYear. Note that currentYear and moonLandingYear are numbers, not strings; they are 2013, not “2013”.

Now, this code example isn’t JavaScript, but it looks a lot like it. The question is, what gets printed? What is the result of “adding” the combination of strings and numbers:

"The first landing was " + currentYear - moonLandingYear + " years ago"

Option 1: Assume that the subtract is done first and the resulting number automatically converted to a string and joined with the rest

"The first landing was " + currentYear - moonLandingYear + " years ago" = "The first landing was " + 2013 - 1969 + " years ago" = "The first landing was " + 44 + " years ago" = "The first landing was 44 years ago"

This seems correct. But is it what the code actually does? There’s another possible interpretation.

Option 2: Assume that we process the + and - symbols from left to right (as you do in maths), converting numbers to strings as we go:

"The first landing was " + currentYear - moonLandingYear + " years ago" = "The first landing was " + 2013 - 1969 + " years ago" = "The first landing was 2013" - 1969 + " years ago" = ....?

Oh.

Hang on.

We know that the plus sign joins two strings together. But what does the minus sign do with a string?

It turns out that not only is this a really bad code example, but if you convert it into actual JavaScript you don’t get the intended result. You get the rather odd output of “NaN years ago”. This is because JavaScript can’t read the string “The first landing was 2013” as a number, so subtracting 1969 from it gives a special value called “Not a Number”, or “NaN” for short.

Here it is in practice: http://jsfiddle.net/8Xs4Q/ – you can click the “Run” button at the top as many times as you like to run the code, which is in the bottom-left box, and see what gets printed in the pop-up box.
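If you’d rather try it in the console, here is a minimal version. I’ve used `console.log` in place of the app’s `print`, which isn’t a JavaScript function for printing text, and added a second line with parentheses, which is one way (not necessarily the way CodeAcademy intended) to get the expected answer.

```javascript
const currentYear = 2013;
const moonLandingYear = 1969;

// As written in the app: + and - are applied left to right, so the string
// "The first landing was 2013" is built first, and subtracting 1969 from
// that string gives NaN.
const asWritten =
  "The first landing was " + currentYear - moonLandingYear + " years ago";

// With parentheses the subtraction is done on the two numbers first.
const withParentheses =
  "The first landing was " + (currentYear - moonLandingYear) + " years ago";

console.log(asWritten);       // NaN years ago
console.log(withParentheses); // The first landing was 44 years ago
```

Notice that the broken version even loses the start of the sentence, and JavaScript reports no error at any point.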

Why is this so bad?

Well, aside from the fact that CodeAcademy’s examples are broken…

JavaScript is a neat, easy-to-use, very forgiving language. In some ways it’s ideal for learning to code because it’s installed on pretty much everyone’s PC as part of their web browser. Just hit the F12 key, click “Console” and you can write JavaScript. And in the right hands it’s a very powerful language too. I don’t deny these things.

But!…it’s SO easy to write the wrong code in JavaScript. It doesn’t tell you when you’ve made a basic error. YES, yes you CAN learn by making mistakes and finding out what you did wrong. But is it not better to teach people not to make those mistakes in the first place? To use a language that points out your mistakes clearly rather than one which makes assumptions about what you mean and carries on regardless?

Code should be easy to understand; it should communicate the correct meaning to both the computer AND the reader. The reader shouldn’t need a detailed understanding of operator precedence and type coercion to understand what’s going on in their first ever hour of coding.

I’m not a perfect coder – no one is. I don’t do all the things in code that I think people should do all the time. But real-world projects have constraints that mean sometimes you have to work a bit quicker, deliver something to a deadline or budget.

But I do think we should teach people to write code that’s meaningful and understandable. We should teach people to write code where it’s clear what the code does, not dependent on assumptions about the language or some deeper understanding. It’s my opinion that neither JavaScript nor CodeAcademy’s new app is a good tool for doing that. Which is a shame, because I LOVE the CodeAcademy concept. Heck, if you’re interested in learning to code, it’s better than anywhere else to get self-taught. Ignore my ranting and go and do something…errr…interesting?